William Dance, Lancaster University for The Conversation

Intentionally false news stories were shared more than 35m times during the 2016 US presidential election, with Facebook playing a significant role in their spread. Shortly after, the Cambridge Analytica scandal revealed that 50m Facebook profiles had been harvested without authorisation and used to target political ads and fake news for the election and later during the UK’s 2016 Brexit referendum.

Though the social network admitted it had been slow to react to the issue, it developed tools for the 2018 US midterm elections that enabled Facebook users to see who was behind the political ads they were shown. Facebook defines ads as any form of financially sponsored content. This can be traditional product adverts or fake news articles that are targeted at certain demographics for maximum impact.

Now the focus is shifting to the 2019 European parliament elections, which will take place from May 23, and the company has introduced a public record of all political ads and sweeping new transparency rules designed to stop them being placed anonymously. This move follows Facebook’s expansion of its fact-checking operations, for example by teaming up with British fact-checking charity FullFact.

Facebook told us that it has taken an industry-leading position on political ad transparency in the UK, with new tools that go beyond what the law currently requires, and that it has invested significantly to prevent the spread of disinformation and bolster high-quality journalism and news literacy. The transparency tools show exactly which page is running ads, and all the ads that page is running. Facebook then houses those ads in its “ad library” for seven years. The company claims it doesn’t want misleading content on its site and is cracking down on it using a combination of technology and human review.

While these measures will go some way towards addressing the problem, several flaws have already emerged. And it remains difficult to see how Facebook can tackle fake news in particular with its existing measures.

In 2018, journalists at Business Insider successfully placed fake ads they listed as paid for by the now-defunct company Cambridge Analytica. It is this kind of fraud that Facebook is aiming to stamp out with its new transparency rules, which require political advertisers to prove their identity. However, it’s worth noting that none of Business Insider’s “test adverts” appear to be listed in Facebook’s new ad library, raising questions about its effectiveness as a full public record.

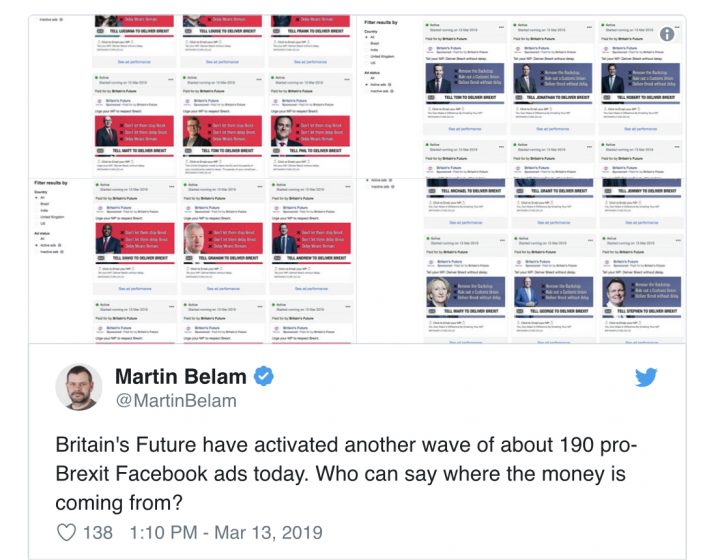

The problem is that listing which person or organisation paid the bill for an ad isn’t the same as revealing the ultimate source of its funding. For example, it was recently reported that Britain’s biggest political spender on Facebook was Britain’s Future, a group that has spent almost £350,000 on ads. The group can be traced back to a single individual: 30-year-old freelance writer Tim Dawson. But exactly who funds the group is unclear.

While the group does allow donations, it is not a registered company, nor does it appear in the database of the UK’s Electoral Commission or the Information Commissioner. This highlights a key flaw in the UK’s political advertising regime that isn’t addressed by Facebook’s measures, and shows that transparency at the ad-buying level isn’t enough to reveal potential improper influence.

The new measures also rely on advertisers classifying their ads as political, or using overtly political language. This means advertisers could still send coded messages that Facebook’s algorithms may not detect.

Facebook recently had more success when it identified and removed its first UK-based fake news network, which comprised 137 groups spreading “divisive comments on both sides of the political debate in the UK”. But the discovery came as part of an investigation into hate speech towards the home secretary, Sajid Javid. This suggests that Facebook’s dedicated methods for tackling fake news aren’t working as effectively as they could.

Facebook has had plenty of time to get to grips with the modern issue of fake news being used for political purposes. As early as 2008, Russia began disseminating online misinformation to influence proceedings in Ukraine, which became a testing ground for the Kremlin’s tactics of cyberwarfare and online disinformation. Isolated fake news stories then began to surface in the US in the early 2010s, targeting politicians and divisive topics such as gun control. These then evolved into sophisticated fake news networks operating at a global level.

But the way Facebook works means it has played a key role in helping fake news become so powerful and effective. The burden of proof for a news story has been lowered to one aspect: popularity. With enough likes, shares and comments – no matter whether they come from real users, click farms or bots – a story gains legitimacy no matter the source.

Safeguarding democracy

As a result, some countries have already decided that Facebook’s self-regulation isn’t enough. In 2018, in a bid to “safeguard democracy”, the French president, Emmanuel Macron, introduced a controversial law banning online fake news during elections that gives judges the power to remove and obtain information about who published the content.

Meanwhile, Germany has introduced fines of up to €50m on social networks that host illegal content, including fake news and hate speech. Incidentally, while Germans make up only 2% of Facebook users, Germans now comprise more than 15% of Facebook’s global moderator workforce. In a similar move in late December 2018, Irish lawmakers introduced a bill to criminalise political adverts on Facebook and Twitter that contain intentionally false information.

The real-life impact these policies have is unclear. Fake news still appears on Facebook in these countries, while the laws give politicians the ability to restrict freedom of speech and the press, something that has sparked a mass of criticism in both Germany and France.

Ultimately, there remains a considerable mismatch between Facebook’s promises to make protecting elections a top priority, and its ability to actually do the job. If unresolved, it will leave the European parliament and many other democratic bodies vulnerable to vast and damaging attempts to influence them.

William Dance, Associate Lecturer in Linguistics, Lancaster University

This article is republished from The Conversation under a Creative Commons license. Read the original article.