The NHS has survived to the age of 70 and now costs the UK just over £120 billion per annum. Many advanced economies spend even more on healthcare per head of population. Why do fully paid-up capitalist nations persist with this Stalinist approach to healthcare?

Instinctively we might cite humanitarian and political reasons. But are these reasons enough? Arguably access to food is a more fundamental human right, yet we don’t have a National Food Service.

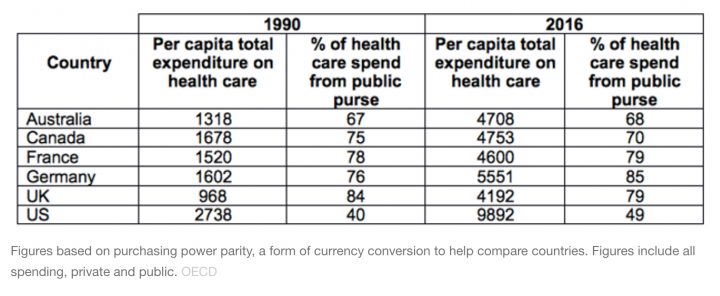

Governments do intervene in food markets, providing social security to poorer people to feed themselves – and we can debate whether it’s adequate. Generally, however, food is left to the market. In contrast, healthcare spending comes mainly from the public purse – see below.

Chart: Total health expenditure/state-funding

In the 1960s, the eminent economists Alan Williams and Dennis Lees held a correspondence about who should fund healthcare. Lees thought there was nothing to distinguish healthcare from other commodities, and thus prevent it being provided mainly via the free market. Williams drew on the analogy of the duck-billed platypus to disagree:

The argument you employ would run as follows … Many birds have duck-type bills, and lots of animals have furry bodies, and as for laying eggs, this is common in birds and reptiles, and all mammals suckle their young, therefore the duck-billed platypus, ‘would appear to have no characteristics which differentiate it sharply from other …’ etc. I hope my point is clear.

In other words, healthcare is unique, despite sharing individual characteristics with other types of expenditure. It’s this unique combination that makes it so problematic to provide via the market. Let me explain.

Insurance failure

Without government spending, an insurance market would develop to cover unpredictable healthcare needs. Once insured, consumers and providers would no longer take costs into account when making healthcare decisions, since the insurer picks up the bill. This is the root of the ongoing cost inflation in US healthcare.

This is exacerbated by administrative costs from billing and advertising – they absorb one in four dollars of US health expenditure; it costs a lot to administer the market. Administration is a cost for public systems, too, but government funding keeps a lid on what can be spent. (Contrast this with the parts of the public health budget that are harder to control, such as drugs spending.)

A naïve observer might say, surely user charges could still put a ceiling on costs in a private system. In reality, charges reduce demand only among the poor (and less healthy). They don’t discriminate between needed and unneeded care – and don’t control total costs anyway, since the system simply concentrates its care-giving on those able to pay. This is why systems with the most user charges, notably France and the US, continue to struggle with costs.

Insurance also excludes the neediest. A well-functioning insurance market tailors low premiums to those at low risk and higher premiums to those at higher risk. In health, those at higher risk tend to be less well off and unable to afford cover.

Because we as a society care that these healthcare needs are met, we see this as a market failure that justifies government intervention. The government intervenes in food markets for similarly altruistic reasons, though the failures are not comprehensive enough to warrant going further – food needs are more predictable and people know what they like.

Some countries try to care for their needy without a full publicly funded system. One option is a system of voluntary contributions, where anyone who pays an agreed regular amount can rely on it for medical cover – such as the Medicare and Medicaid systems for vulnerable groups in the US.

Yet before Obamacare, at least one in six Americans were still inadequately insured. This subsequently dropped to more like one in eight, but has since been rising as the Trump administration attempts to unpick the reforms. The US has still not learned that taxation is the most effective way to ensure 100% coverage – leaving it as the only advanced economy in the world unable to do so.

Finally, markets work properly when consumers are well informed, which isn’t the case in healthcare. Since it’s so specialised, consumers instead rely on the fact that medics must attain certain qualifications before they receive a licence to practise. The danger is that, once granted, this literally becomes a licence to print money. Combating this requires what the great Canadian health economist Robert Evans once referred to as the “countervailing power” of government to negotiate with the professionals over pay and levels of provision.

The great survivor

As early as 1953, the UK Conservative government requested an inquiry into the cost of the NHS with a view to dismantling it. This backfired when the Guillebaud report of 1956 declared the NHS value for money. There are still many in government today who would divest the NHS if they could, as has happened with other goods once thought the preserve of public provision. They cannot, precisely because of how healthcare operates as a commodity.

I don’t mean to paint the NHS picture as rosy but, in reality, publicly funded healthcare is more efficient and more equitable. The UK is heading for a total health bill of £200 billion per annum, but even that is actually great value for money.

The US highlights the alternative – spend twice as much and fail to provide access for the whole population. Perhaps recognising healthcare as the duck-billed platypus of commodities, even Margaret Thatcher declared in 1989 that “the NHS will continue to be available to all … and to be financed mainly out of general taxation”.

With Theresa May’s recent early birthday present to the NHS, it is a case of déjà vu. It’s a reminder that health spending is as much a policy choice as a question of affordability.

More evidence-based articles about the NHS:

- The NHS explained in eight charts

- Is the 3.4% spending increase enough to ‘save’ the NHS?

- What was healthcare like before the NHS?


Cam Donaldson, Yunus Chair in Social Business & Health, Glasgow Caledonian University

This article was originally published on The Conversation. Read the original article.