We republish here two related articles from The Conversation that show the need to remain vigilant about what social media is doing with the information we share.

Four ways your Google searches and social media affect your opportunities in life

Lorna McGregor, University of Essex; Daragh Murray, University of Essex, and Vivian Ng, University of Essex

Whether or not you realise or consent to it, big data can affect you and how you live your life. The data we create when using social media, browsing the internet and wearing fitness trackers are all collected, categorised and used by businesses and the state to create profiles of us. These profiles are then used to target advertisements for products and services to those most likely to buy them, or to inform government decisions.

Big data enable states and companies to access, combine and analyse our information and build revealing – but incomplete and potentially inaccurate – profiles of our lives. They do so by identifying correlations and patterns in data about us, and people with similar profiles to us, to make predictions about what we might do.

But just because big data analytics are based on algorithms and statistics does not mean that they are accurate, neutral or inherently objective. And while big data may provide insights about group behaviour, these are not necessarily a reliable way to determine individual behaviour. In fact, these methods can open the door to discrimination and threaten people’s human rights – they could even be working against you. Here are four examples where big data analytics can lead to injustice.

1. Calculating credit scores

Big data can be used to make decisions about credit eligibility, affecting whether you are granted a mortgage, or how high your car insurance premiums should be. These decisions may be informed by your social media posts and data from other apps, which are taken to indicate your level of risk or reliability.

But data such as your education background or where you live may not be relevant or reliable for such assessments. This kind of data can act as a proxy for race or socioeconomic status, and using it to make decisions about credit risk could result in discrimination.

2. Job searches

Big data can be used to determine who sees a job advertisement or gets shortlisted for an interview. Job advertisements can be targeted at particular age groups, such as 25 to 36-year-olds, which excludes younger and older workers from even seeing certain job postings and presents a risk of age discrimination.

Automation is also used to make filtering, sorting and ranking candidates more efficient. But this screening process may exclude people on the basis of indicators such as the distance of their commute. Employers might suppose that those with a longer commute are less likely to remain in a job long-term, but this can actually discriminate against people living further from the city centre due to the location of affordable housing.

3. Parole and bail decisions

In the US and the UK, big data risk assessment models are used to help officials decide whether people are granted parole or bail, or referred to rehabilitation programmes. They can also be used to assess how much of a risk an offender presents to society, which is one factor a judge might consider when deciding the length of a sentence.

It’s not clear exactly what data is used to help make these assessments, but as the move toward digital policing gathers pace, it’s increasingly likely that these programmes will incorporate open source information such as social media activity – if they don’t already.

These assessments may not just look at a person’s profile, but also at how theirs compares to others’. Some police forces have historically over-policed certain minority communities, leading to a disproportionate number of reported criminal incidents. If this data is fed into an algorithm, it will distort the risk assessment models and result in discrimination which directly affects a person’s right to liberty.

4. Vetting visa applications

Last year, the United States’ Immigration and Customs Enforcement Agency (ICE) announced that it wanted to introduce an automated “extreme visa vetting” programme. It would automatically and continuously scan social media accounts, to assess whether applicants will make a “positive contribution” to the United States, and whether any national security issues may arise.

As well as presenting risks to freedom of thought, opinion, expression and association, there were significant risks that this programme would discriminate against people of certain nationalities or religions. Commentators characterised it as a “Muslim ban by algorithm”.

The programme was recently withdrawn, reportedly on the basis that “there was no ‘out-of-the-box’ software that could deliver the quality of monitoring the agency wanted”. But including such goals in procurement documents can create bad incentives for the tech industry to develop programmes that are discriminatory-by-design.

There’s no question that big data analytics works in ways that can affect individuals’ opportunities in life. But the lack of transparency about how big data are collected, used and shared makes it difficult for people to know what information is used, how, and when. Big data analytics are simply too complicated for individuals to be able to protect their data from inappropriate use. Instead, states and companies must make – and follow – regulations to ensure that their use of big data doesn’t lead to discrimination.

Lorna McGregor, Director, Human Rights Centre, PI and Co-Director, ESRC Human Rights, Big Data and Technology Large Grant, University of Essex; Daragh Murray, Lecturer in International Human Rights Law at Essex Law School, University of Essex, and Vivian Ng, Senior Researcher in Human Rights, University of Essex

This article was originally published on The Conversation. Read the original article.

What Facebook isn’t telling us about its fight against online abuse

Laura Bliss, Edge Hill University

Facebook has for the first time made available data on the scale of abusive comments posted to its site. This may have been done in response to growing pressure from organisations for social media companies to be more transparent about online abuse, or to gain credibility after the Cambridge Analytica data scandal. Either way, the figures do not make for pleasant reading.

In a six-month period from October 2017 to March 2018, 21m sexually explicit pictures, 3.5m graphically violent posts and 2.5m forms of hate speech were removed from its site. These figures help reveal some striking points.

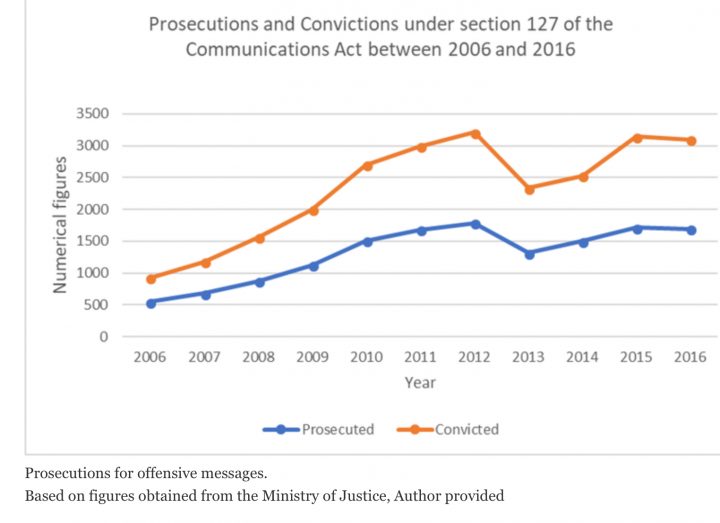

As expected, the data indicates that the problem is getting worse. For instance, between January and March it was estimated that for every 10,000 messages online, between 22 and 27 contained graphic violence, up from 16 to 19 in the previous three months. This puts into sharp relief the fact that in the UK, prosecutions for online abuse have been decreasing, as demonstrated in the graph below.

Yet what Facebook hasn’t told us is just as significant.

The social network has been under growing pressure to combat abuse on its site, in particular, the removal of terrorist propaganda after events such as the 2017 Westminster attack and Manchester Arena bombing. Here, the company has been proactive. Between January and March 2018, Facebook removed 1.9m messages encouraging terrorist propaganda, an increase of 800,000 comments compared to the previous three months. A total of 99.5% of these messages were located with the aid of advancing technology.

At first glance, it looks like Facebook has successfully developed software that can remove this content from its server. But Facebook hasn’t released figures showing how prevalent terrorist propaganda is on its site. So we really don’t know how successful the software is in this respect.

Removing violent posts

Facebook has also used technology to aid the removal of graphic violence from its site. Between the two three-month periods there was a 183% increase in the number of removed posts labelled as graphically violent. A total of 86% of these comments were flagged by a computer system.

But Facebook’s figures also show that up to 27 out of every 10,000 comments that made it past the detection technology contained graphic violence. That doesn’t sound like many, but it’s worth considering the sheer number of comments posted to the site by its more than 2 billion active users. One estimate suggests that 510,000 comments are posted every minute. If accurate, that would mean 1,982,880 violent comments are posted every 24 hours.
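The daily estimate above is simple arithmetic. The short Python sketch below makes each step explicit, using only the two inputs quoted in the article: the third-party estimate of 510,000 comments per minute and Facebook’s upper bound of 27 violent comments per 10,000. The constant and function names are hypothetical and chosen purely for illustration; the result is only as reliable as the per-minute estimate it starts from.

```python
# Back-of-the-envelope check of the daily estimate quoted in the article.
# Inputs (both taken from the article): an estimated 510,000 comments per
# minute, and the upper-bound prevalence of 27 violent comments per 10,000.
COMMENTS_PER_MINUTE = 510_000
VIOLENT_PER_10K = 27

MINUTES_PER_DAY = 60 * 24


def violent_comments_per_day(per_minute: int, per_10k: int) -> int:
    """Estimate daily violent comments from a per-minute volume and a per-10,000 rate."""
    comments_per_day = per_minute * MINUTES_PER_DAY
    # Integer arithmetic keeps the result exact (no floating-point rounding).
    return comments_per_day * per_10k // 10_000


daily_total = COMMENTS_PER_MINUTE * MINUTES_PER_DAY
print(daily_total)  # 734400000 comments per day
print(violent_comments_per_day(COMMENTS_PER_MINUTE, VIOLENT_PER_10K))  # 1982880
```

Because 27 per 10,000 is the top of Facebook’s estimated range, the figure of roughly 1.98 million is an upper-bound estimate rather than a measured count.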

To make up for the failures in its detection software, Facebook, like other social networks, has for years relied on self-regulation, with users encouraged to report comments they believe should not be on the site. For example, between January and March 2018, Facebook removed 2.5m comments that were considered hate speech, yet only 950,000 (38%) of these messages had been flagged by its system. The other 62% were reported by users. This shows that Facebook’s technology is failing to adequately combat hate speech on its network, despite the growing concern that social networking sites are fuelling hate crime in the real world.
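The 38% and 62% shares follow directly from the two hate speech figures for January to March 2018. The minimal Python sketch below reproduces them. It assumes, as the article states, that every removal not flagged by Facebook’s system came from a user report. The names used are hypothetical.

```python
# Split of hate speech removals (January-March 2018) between automated
# flagging and user reports, using the figures quoted in the article.
TOTAL_REMOVED = 2_500_000
FLAGGED_BY_SYSTEM = 950_000


def share_percent(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


system_share = share_percent(FLAGGED_BY_SYSTEM, TOTAL_REMOVED)
# Assumption (per the article): all remaining removals were user-reported.
user_share = 100 - system_share
print(f"system: {system_share:.0f}%, users: {user_share:.0f}%")
```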

How many comments are reported?

This brings us to the other significant figure not included in the data released by Facebook: the total number of comments reported by users. As this is a fundamental mechanism in tackling online abuse, the number of reports made to the company should be made publicly available. This would allow us to understand the full extent of abusive commentary made online, while making clear the total number of messages Facebook doesn’t remove from the site.

Facebook’s decision to release data exposing the scale of abuse on its site is a significant step forward. Twitter, by contrast, was asked for similar information but refused to release it, claiming it would be misleading. Clearly, not all comments flagged by users of social networking sites will breach the sites’ terms and conditions. But Twitter’s failure to release this information suggests the company is not willing to reveal the scale of abuse on its own site.

However, even Facebook still has a long way to go to get to total transparency. Ideally, all social networking sites would release annual reports on how they are tackling abuse online. This would enable regulators and the public to hold the firms more directly to account for failures to remove online abuse from their servers.

Laura Bliss, PhD candidate in social media law, Edge Hill University

This article was originally published on The Conversation. Read the original article.